Dionne discussed the upcoming game for the Chicago Bulls as they prepared to face...

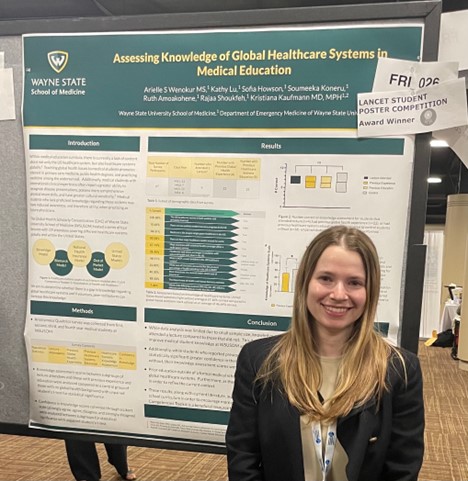

Arielle Wenokur, a medical student at Wayne State University, recently won top honors for...

Paul Toulouse, the director of Systovi, a French photovoltaic panel producer, is facing overwhelming...

In today’s fast-paced business world, data and insights are no longer just useful tools...

The historic trial of former US President Donald Trump resumed in New York, with...

In a report on the state of the video game industry, IIDEA provides an...

The University of Jamestown, ranked No. 9, successfully defended its home court in the...

The government has recently received recommendations from a special inter-ministerial commission regarding the format...

In a major development for the Spanish automotive industry, Chinese automobile group Chery has...

Seritage Growth Properties (NYSE: SRG) was significantly impacted by the COVID-19 pandemic, leading to...